Technical Documentation

Designed for technical buyers, engineering teams, and partners who want to understand how Ravando builds, deploys, and maintains AI automation systems.

1. Platform & Technology Stack

What platforms do you build on?

We mainly use workflow-orchestration platforms combined with API-first integrations for flexibility and maintainability:

Primary Automation Platforms

- Make.com: Visual workflow orchestration, SOC 2 Type II certified.

- n8n: Open-source, self-hostable workflow automation.

AI/LLM Providers

- OpenAI API (GPT): General-purpose

- Anthropic Claude (Opus/Sonnet): Complex reasoning

- Google Gemini: Multimodal processing

- Perplexity: Research

Why These Platforms?

- Model-agnostic architecture: Not locked into a single AI provider

- Vendor independence: You own workflows; we don't gate-keep access

- Enterprise-grade security: SOC 2, GDPR, ISO 27001 compliance

- Scalability: Handle 1,000 or 100,000 operations/month

When We Use Custom Code

While platforms handle orchestration, we deploy custom code for:

- Agentic environments with self-annealing capabilities

- Complex data transformations beyond platform limits

- Custom ML models or fine-tuned LLMs

- Highly sensitive data requiring air-gapped processing

Integrations Support

We integrate with 1,000+ business tools via pre-built connectors and custom APIs:

CRM & Sales

Project Management

Communication

Don't see your tool? If it has an API, we can integrate it. We also support PostgreSQL, MySQL, MongoDB, and custom GraphQL/REST implementations.

2. AI Systems & Architecture

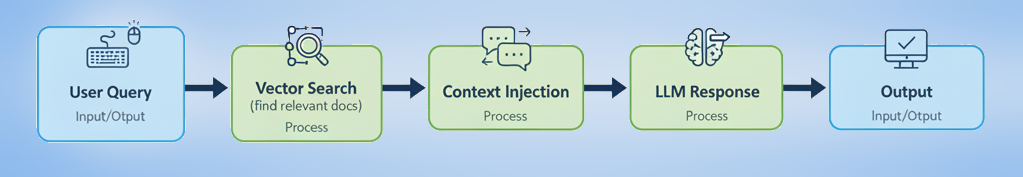

1. Retrieval-Augmented Generation (RAG)

Instead of relying solely on the AI's training data, we connect it to your knowledge base (documents, databases, CRM, etc.) to improve accuracy.

Result: 85-95% reduction in hallucinations compared to zero-shot prompting.
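The retrieval step can be illustrated with a minimal sketch. It assumes a toy word-overlap ranking as a stand-in for whatever search a real deployment uses (typically embedding-based vector search); the function names and the sample knowledge base are hypothetical and not part of any Ravando system.

```python
import re

def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query: str, documents: list[str], top_k: int = 2) -> list[str]:
    """Rank documents by word overlap with the query (toy stand-in for vector search)."""
    query_tokens = tokenize(query)
    scored = [(len(query_tokens & tokenize(doc)), doc) for doc in documents]
    # Keep only documents that share at least one word with the query.
    ranked = sorted((s for s in scored if s[0] > 0), key=lambda s: s[0], reverse=True)
    return [doc for _, doc in ranked[:top_k]]

def build_grounded_prompt(query: str, documents: list[str]) -> str:
    """Prepend the retrieved context so the model answers from it, not from memory."""
    context = retrieve(query, documents)
    context_block = "\n".join(f"- {doc}" for doc in context) or "- (no matching context)"
    return (
        "Answer using only the context below. "
        "If the context does not contain the answer, say so.\n"
        f"Context:\n{context_block}\n"
        f"Question: {query}"
    )

# Hypothetical knowledge base entries, for illustration only.
KNOWLEDGE_BASE = [
    "Refunds are processed within 14 days of the return request.",
    "Support is available Monday to Friday, 9:00 to 17:00 CET.",
    "Enterprise plans include a dedicated support engineer.",
]
prompt = build_grounded_prompt("How long do refunds take?", KNOWLEDGE_BASE)
```

The resulting `prompt` string would then be sent to whichever LLM provider the workflow uses.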

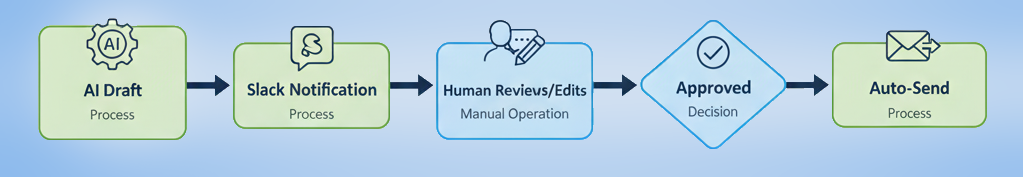

2. Human-in-the-Loop (HITL) Workflows

For high-stakes decisions (e.g., contract analysis), we implement human review checkpoints.

When HITL is Required

- Outbound sales emails

- Legal document generation

- Financial calculations

When HITL is Optional

- Internal data entry

- Categorization/tagging

- Research summaries
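A review checkpoint of this kind might be routed as in the sketch below. The task-type identifiers mirror the lists above, while the `confidence` score, the 0.8 threshold, and the route names are assumptions made for illustration.

```python
from dataclasses import dataclass

# Task types taken from the "required" list above; the identifiers are illustrative.
REVIEW_REQUIRED = {"outbound_sales_email", "legal_document", "financial_calculation"}

@dataclass
class AIOutput:
    task_type: str
    text: str
    confidence: float  # hypothetical 0.0-1.0 score supplied by the workflow

def route(output: AIOutput, threshold: float = 0.8) -> str:
    """Return 'human_review' or 'auto_send' for an AI output."""
    if output.task_type in REVIEW_REQUIRED:
        return "human_review"  # always reviewed, regardless of confidence
    if output.confidence < threshold:
        return "human_review"  # optional-review task, but the model was unsure
    return "auto_send"
```

For example, `route(AIOutput("categorization", "Billing", 0.95))` returns `"auto_send"`, while any `legal_document` output goes to review.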

3. Hallucination Detection & Monitoring

We implement continuous monitoring to ensure system integrity:

- Fact-checking pipelines: Cross-reference outputs against source data automatically.

- Consistency checks: Compare multiple AI responses to the same query for variance.
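One simple way to approximate a consistency check is a majority vote over several responses to the same query. The sketch below assumes exact matching after light normalization; the sample answers and the 0.75 cut-off are hypothetical, and a production check might compare meaning rather than text.

```python
from collections import Counter

def normalize(answer: str) -> str:
    """Lowercase, collapse whitespace, and drop a trailing period."""
    return " ".join(answer.lower().split()).rstrip(".")

def agreement(responses: list[str]) -> tuple[str, float]:
    """Return the most common normalized answer and the share of responses matching it."""
    if not responses:
        raise ValueError("at least one response is required")
    counts = Counter(normalize(r) for r in responses)
    answer, hits = counts.most_common(1)[0]
    return answer, hits / len(responses)

# Three hypothetical responses to the same query.
samples = ["Net 30 days.", "net 30 days", "Net 45 days."]
answer, share = agreement(samples)
needs_review = share < 0.75  # hypothetical cut-off for flagging variance
```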

4. Error Handling Hierarchy

- Automatic retry: Retry with exponential backoff for transient errors (API timeouts).

- Fallback models: Switch to Anthropic if OpenAI fails.

- Human escalation: Alerts for critical errors for manual intervention.

- Graceful degradation: Reduced functionality instead of crash.
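The four levels above can be sketched as a single call path. This is a minimal illustration, not the production implementation: `TransientError`, the provider callables, the `alert` hook, and the degraded message are all hypothetical placeholders.

```python
import time
from typing import Callable

class TransientError(Exception):
    """Stand-in for a timeout or other retryable failure."""

def call_with_retry(fn: Callable[[str], str], prompt: str, attempts: int = 3,
                    base_delay: float = 0.5,
                    sleep: Callable[[float], None] = time.sleep) -> str:
    """Level 1: retry with exponential backoff (0.5s, 1s, 2s, ...)."""
    for attempt in range(attempts):
        try:
            return fn(prompt)
        except TransientError:
            if attempt == attempts - 1:
                raise  # out of retries; let the caller fall back
            sleep(base_delay * 2 ** attempt)
    raise ValueError("attempts must be at least 1")

def generate(prompt: str, providers: list[tuple[str, Callable[[str], str]]],
             alert: Callable[[str], object],
             sleep: Callable[[float], None] = time.sleep) -> str:
    """Try each provider in order; escalate and degrade if all of them fail."""
    for _name, fn in providers:
        try:
            return call_with_retry(fn, prompt, sleep=sleep)
        except TransientError:
            continue  # Level 2: fall back to the next provider
    alert(f"All providers failed for prompt: {prompt[:40]}")  # Level 3: human escalation
    # Level 4: graceful degradation instead of a crash.
    return "We could not generate a response right now; a team member will follow up."
```

Passing `sleep` and `alert` as parameters keeps the sketch testable without real delays or a real alerting channel.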

5. Customization Levers

- Prompt Engineering: Adjusting tone, length, format, and constraints.

  - Tone: professional, casual, empathetic, technical, ...

  - Length: concise (50 words) vs. detailed (500 words), ...

  - Format: bullet points, paragraphs, tables, JSON, ...

  - Constraints: "Never mention competitors", "Always include pricing", ...

- Few-Shot Examples: Providing 3-5 input/output pairs to guide behavior.

- Fine-Tuning: Training on examples for domain specificity.
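The prompt-engineering and few-shot levers can be combined when a request is assembled. The sketch below assumes the chat-style `role`/`content` message layout that several LLM APIs accept; the function name and the sample values are hypothetical.

```python
def build_messages(tone: str, max_words: int, constraints: list[str],
                   examples: list[tuple[str, str]], user_input: str) -> list[dict]:
    """Assemble a system instruction, few-shot pairs, and the live input."""
    system = (
        f"Write in a {tone} tone. Keep the answer under {max_words} words. "
        + " ".join(constraints)
    )
    messages = [{"role": "system", "content": system}]
    # Each example is an (input, gold-standard output) pair shown to the model.
    for example_input, example_output in examples:
        messages.append({"role": "user", "content": example_input})
        messages.append({"role": "assistant", "content": example_output})
    messages.append({"role": "user", "content": user_input})
    return messages
```

With three to five example pairs, the message list stays short while still showing the model the expected style.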

Standard Operating Procedures

Workflow Reliability Framework

Technical standards and methodologies applied to automation workflows to ensure operational uptime and logic integrity.

| Category | Technical Standard | Methodology | Operational Value |
|---|---|---|---|
| 1. Triggers | Idempotency Logic | Assignment of unique digital fingerprints to every data point. | Prevents duplicate actions, such as double-emailing leads or processing repeat invoices. |
| 1. Triggers | Smart Filtering | Implementation of pre-defined business criteria to qualify triggers. | Minimizes operational costs by ensuring AI resources are only spent on high-value signals. |
| 2. Context | Data Sanitation | Automated removal of HTML tags, whitespace, and formatting noise. | Eliminates "Garbage In, Garbage Out" risks, ensuring high-quality model inputs. |
| 2. Context | Context Optimization | Condensation of large datasets into high-impact summaries. | Prevents model "forgetting" and keeps the AI focused on mission-critical information. |
| 3. Prompting | System Persona | Hard-coding of professional protocols and behavioral constraints. | Guarantees brand consistency and ensures compliance with industry standards. |
| 3. Prompting | Few-Shot Learning | Embedding of curated "Input vs. Gold Standard Output" mapping. | Trains the model on specific corporate styles through example rather than instruction. |
| 3. Prompting | Chain of Thought | Requirement for step-by-step logical analysis before outputting. | Drastically reduces hallucinations by forcing the AI to verify internal reasoning. |
| 4. Validation | Structured JSON | Mandating AI responses be delivered in machine-readable code. | Enables seamless data integration between the AI and core business systems (CRM, ERP). |
| 4. Validation | Negative Constraints | Explicit programming of "forbidden" actions and topics. | Provides a safety layer that prevents the AI from discussing competitors or sensitive data. |
| 5. HITL | Confidence Thresholds | Routing of low-confidence AI outputs to a human review queue. | Ensures high-stakes tasks remain human-verified while automating routine operations. |
| 5. HITL | Review Interface | Integration of custom Slack/Web dashboards for rapid approval. | Streamlines oversight, allowing team members to manage workloads with a single click. |
| 6. Resilience | Auto-Retry | Development of "Self-Healing" loops for API timeouts. | Maintains 24/7 system uptime and prevents workflow breakage during outages. |
| 6. Resilience | Traceability Logs | Recording of every AI "thought process" in a secure database. | Provides a full audit trail for accountability, troubleshooting, and optimization. |
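As an illustration of the idempotency row, a trigger can fingerprint each incoming event and skip any it has already seen. This sketch assumes a SHA-256 hash over canonical JSON and an in-memory set; the class name is hypothetical, and a real deployment would need durable storage for the fingerprints.

```python
import hashlib
import json

def fingerprint(event: dict) -> str:
    """Hash a canonical JSON form so key order does not change the fingerprint."""
    canonical = json.dumps(event, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

class IdempotentTrigger:
    """Accept each distinct event once; reject repeats."""

    def __init__(self) -> None:
        self._seen: set[str] = set()  # in-memory only, for the sketch

    def should_process(self, event: dict) -> bool:
        key = fingerprint(event)
        if key in self._seen:
            return False  # duplicate: skip to avoid e.g. a second email
        self._seen.add(key)
        return True
```

Which fields go into the fingerprint is a design choice: hashing only a stable identifier (for example an invoice number) catches resends whose other fields differ slightly.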

3. Quality Assurance & Safety

QA Process

Unit Testing

Validate individual workflow steps and API connections. Check error handling and retry logic. Confirm data transformations are accurate.

Integration Testing

End-to-end runs with test data and edge cases (empty inputs, malformed data, API failures, etc.). Measure latency and performance.

Prompt Validation

Adversarial testing and hallucination audits. Validate prompt engineering.

Monitoring

Continuous monitoring of system performance, error rates, and user feedback. Prompt optimization reviews.

Performance Metrics (KPIs)

Accuracy Metrics

- Precision: % of outputs that are correct

- Recall: % of answers found

- Groundedness: % of outputs aligned with source data

Operational Metrics

- Latency: Average trigger-to-completion time

- Throughput: Operations per hour/day

- Reliability: % uptime, measured 24/7

- Cost Efficiency: Cost per operation (API + platform)

Business Metrics

- Time saved: Hours/month of manual work eliminated

- Cost saved: Monthly labor costs eliminated

- Conversion rate: % of leads converted

- Revenue uplift: Revenue increase

- Automation ROI: (savings + gains - system costs) / system costs
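A few of these metrics can be computed directly. The sketch below uses the standard true-positive/false-positive definitions of precision and recall, which is an assumption about how the percentages above are measured, together with the ROI formula as stated; the figures in the usage note are made up.

```python
def precision(true_positives: int, false_positives: int) -> float:
    """Share of produced outputs that were correct."""
    total = true_positives + false_positives
    return true_positives / total if total else 0.0

def recall(true_positives: int, false_negatives: int) -> float:
    """Share of expected answers that the system actually found."""
    total = true_positives + false_negatives
    return true_positives / total if total else 0.0

def automation_roi(savings: float, gains: float, system_costs: float) -> float:
    """(savings + gains - system costs) / system costs."""
    if system_costs <= 0:
        raise ValueError("system_costs must be positive")
    return (savings + gains - system_costs) / system_costs
```

For instance, 4,000 in monthly savings plus 1,000 in gains against 2,000 in system costs gives `automation_roi(4000, 1000, 2000) == 1.5`, i.e. a 150% return.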

We build real-time KPI dashboards tailored to your objectives, so you can monitor ROI and pinpoint optimization opportunities across your automated systems and core processes. These custom monitoring solutions track the metrics you define, not just the ones listed above.

Safety Mechanisms

- Content Filters

  - OpenAI Moderation API: Flags hate speech, violence, self-harm, ...

  - Custom blacklists: Block specific words, phrases, or topics

  - Output validation: Regex patterns or modules to catch policy violations

  - Human-in-the-Loop: High-risk outputs require approval before sending

- Access Controls

  - Role-Based Access Control (RBAC): Limit who can modify workflows

  - Audit logging: Track every change and who made it

  - Two-factor authentication (2FA): Protect admin accounts

  - IP whitelisting: Restrict access to specific networks

- Fail-Safe Mechanisms

  - Rate limiting: Prevents abuse and ensures fair usage

  - Budget caps: Limits spending to prevent financial loss

  - Kill switches: Emergency stop for critical systems

  - Rollback capability: Revert to previous versions
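The custom-blacklist and regex output-validation filters might look like the sketch below. The blocked term and both patterns are hypothetical examples, deliberately simple, and would produce false positives on real traffic; they are not a complete policy.

```python
import re

BLOCKED_TERMS = {"competitorx"}  # hypothetical blacklist entry
POLICY_PATTERNS = {
    # Deliberately loose patterns, for illustration only.
    "email_address": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "card_number": re.compile(r"\b(?:\d[ -]?){13,16}\b"),
}

def validate_output(text: str) -> list[str]:
    """Return a list of violation labels; an empty list means the text passed."""
    violations = []
    lowered = text.lower()
    for term in sorted(BLOCKED_TERMS):
        if term in lowered:
            violations.append(f"blocked_term:{term}")
    for name, pattern in POLICY_PATTERNS.items():
        if pattern.search(text):
            violations.append(f"pattern:{name}")
    return violations
```

An output with a non-empty violation list could then be blocked or sent to the human approval step.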

4. Infrastructure & Deployment

Hosting Options

- Cloud-Based (Default): Make.com (EU/US), OpenAI API.

- Self-Hosted: n8n, Docker, Air-gapped options.

Redundancy & Outages

Make.com Outage Fallback

Critical workflows are replicated on n8n, and auto-failover routes traffic to the backup platform.

OpenAI API Fallback

Circuit breaker pattern detects failure and switches engine to Anthropic Claude or Google Gemini.
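A minimal sketch of the circuit breaker pattern follows. The thresholds, the class and function names, and the `primary`/`fallback` callables are assumptions for illustration; they stand in for real provider clients.

```python
import time
from typing import Callable, Optional

class CircuitBreaker:
    """Open after repeated failures; allow a trial call after a cool-down."""

    def __init__(self, failure_threshold: int = 3, reset_after: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.reset_after = reset_after
        self._clock = clock
        self._failures = 0
        self._opened_at: Optional[float] = None

    def allow(self) -> bool:
        if self._opened_at is None:
            return True  # closed: calls flow to the primary provider
        # Open: block calls until the cool-down has elapsed (half-open trial).
        return self._clock() - self._opened_at >= self.reset_after

    def record_success(self) -> None:
        self._failures = 0
        self._opened_at = None

    def record_failure(self) -> None:
        self._failures += 1
        if self._failures >= self.failure_threshold:
            self._opened_at = self._clock()

def complete(prompt: str, primary: Callable[[str], str],
             fallback: Callable[[str], str], breaker: CircuitBreaker) -> str:
    """Use the primary provider unless the breaker is open or the call fails."""
    if breaker.allow():
        try:
            result = primary(prompt)
            breaker.record_success()
            return result
        except Exception:
            breaker.record_failure()
    return fallback(prompt)
```

While the breaker is open, the failing provider is not called at all, which avoids paying the timeout on every request during an outage.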

Initial Deployment

- Staging environment setup: Clone production config for safe testing.

- Credential provisioning: Secure API keys and OAuth tokens.

- Access control configuration: Set granular user permissions.

- Initial data migration: Import historical data if needed.

- User training: Walkthrough of system operation.

- Go-live cutover: Switch from manual to automated processes.

Updates & Maintenance

- Versioning: Workflows are version-controlled.

- Rollback: Revert to previous version if needed.

- Zero-downtime: New version deployed in parallel.

- Notifications: You're notified 48 hours before any update.

- Testing: All updates tested in staging.

5. Data Security & Compliance

GDPR Compliance

We implement GDPR best practices as a Data Processor under your instructions.

Processing Agreements

- DPAs executed with all clients

- Documentation of sub-processors

- Purpose limitation & storage caps

Data Subject Rights

- Right to access & rectification

- Right to erasure (Right to be forgotten)

- Machine-readable data portability

Encryption & Access

TLS 1.3 for data in transit. AES-256 for data at rest. OAuth 2.0 for authorizing access to connected services, with credentials kept in secure storage. Role-based access control (RBAC) is enforced across all platforms.

6. Troubleshooting & Edge Cases

Inconsistent Responses?

Diagnosis: Check prompt variability, review temperature settings (0.1-0.3 recommended), and ensure RAG retrieval is fetching the same context.

Solution: Lower temperature to 0.0 for near-deterministic results, add few-shot examples, or switch to GPT-4o for higher consistency.

System Latency?

Benchmarks: AI Email (3-8s), Custom Agent (10-30s), Document Analysis (30-60s).

Strategy: Group requests via batch processing, implement caching for frequent queries, or use faster models like Claude 3 Haiku for simple tasks.
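Caching for frequent queries can be sketched as a small time-limited cache keyed on the normalized prompt. The class name, the 300-second TTL, and the normalization rule are assumptions; `compute` stands in for the real model call.

```python
import hashlib
import time
from typing import Callable

class ResponseCache:
    """Reuse a stored response for repeated prompts until it expires."""

    def __init__(self, ttl_seconds: float = 300.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl = ttl_seconds
        self._clock = clock
        self._entries: dict[str, tuple[float, str]] = {}

    @staticmethod
    def _key(prompt: str) -> str:
        # Ignore case and extra whitespace so trivially different prompts share a key.
        normalized = " ".join(prompt.lower().split())
        return hashlib.sha256(normalized.encode("utf-8")).hexdigest()

    def get_or_compute(self, prompt: str, compute: Callable[[str], str]) -> str:
        key = self._key(prompt)
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and now - entry[0] < self.ttl:
            return entry[1]  # cache hit: no model call, no added latency
        value = compute(prompt)
        self._entries[key] = (now, value)
        return value
```

A short TTL limits the risk of serving stale answers when the underlying knowledge base changes.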

Integration Breaks?

Procedure: Monitoring detects failure → System auto-pauses → Assess provider changelog → Update implementation → Verify in staging → Deploy fix.

API rate limits?

Diagnosis: Workflow fails with "429 Too Many Requests" response from a third-party provider.

Solution: Implement intelligent queuing, add jitter between requests, or upgrade API tiers for higher throughput.
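The jitter idea can be sketched as a delay calculation applied before re-queuing a request that received a 429. It assumes the provider may send a `Retry-After` value in seconds; the function name, base delay, and cap are hypothetical.

```python
import random
from typing import Callable, Optional

def backoff_delay(attempt: int, retry_after: Optional[float] = None,
                  base: float = 1.0, cap: float = 60.0,
                  rng: Callable[[], float] = random.random) -> float:
    """Seconds to wait before retry number `attempt` (0-based) after a 429."""
    if retry_after is not None:
        # Honor the provider's hint, plus a little jitter so queued
        # requests do not all resume at the same instant.
        return retry_after + rng() * base
    # "Full jitter": pick a random delay up to the capped exponential bound.
    return rng() * min(cap, base * 2 ** attempt)
```

Randomizing the wait spreads retries out over time, which lowers the chance that a burst of queued requests hits the limit again together.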

7. Glossary: Key Terminology

AI & Machine Learning Terms

Automation & Integration Terms

Quality & Reliability Terms

Infrastructure & Security Terms

8. Contact Technical Support

For Existing Clients

Contact your support engineer

Target Response Times

Critical: 2-4 business hours

Normal: 8-24 business hours

Please include error logs, screenshots, and timestamps for faster resolution.

For Prospective Clients

Discuss partnership options

BRING THIS TO THE CALL:

- Current tech stack (CRM, databases)

- Data volume estimates

- Compliance requirements (GDPR, etc.)